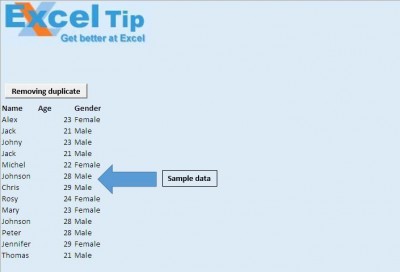

I have noticed a problem when I look up large numbers of objects over the Workfront API. An example: when looking up 14,038 task entries (2000 at a time) and then comparing their IDs, I find 12 IDs that are duplicates of entries already retrieved. This also means that there are 12 entries that were not retrieved, because the duplicates have "pushed" them out of the result.

Things get weird when I try to run the same search again a relatively short time after: the number of duplicates decreases. I still get the exact same number of entries, but now with fewer duplicates and more unique entries. Sometimes the second lookup has entirely unique values, sometimes it's the third full lookup.

The lookup is done in a very simple C# console application, which doesn't save anything between executions, so I don't think that there is any caching going on on my end. For now, I can hack the issue away by simply running the full search again and again until I get no duplicates, but that's obviously not a pretty solution.

The problem happens for all object types I have tried (where I have more than 2000 entries to look up), and the duplicates tend to pop up in the last chunks of 2000 objects that I retrieve. I have tried retrieving all fields or only the ID; the situation is still the same.

Has anyone seen this problem before, and possibly know of a way to mitigate it? Thanks in advance.

Hi Martin, I've now come across this issue and the behaviour is exactly the same as you describe. As you found, the number of duplicates really varies between executions. I've found that once I get it down to around 14,000 records (7 pages) it usually doesn't give me duplicates. However, for sets of 50,000+ records, I'm generally getting around 600 duplicates (and missing, I think, the same number of unique records).

I don't think I could even try your "not a pretty solution" of re-running the set of queries multiple times, because I will always get duplicates at this scale. However, I'm thinking (as a workaround) I may be able to run the full sets of queries 2 or 3 times, then append the results and remove duplicates. It seems pretty random as to which records are duplicated/excluded each time, so if it's random enough I should hopefully get fairly close to the full set of records.

I'm going to log a support ticket as well, but I don't see this as a quick fix. Babayan and Den Hoed - AtAppStore, if you have any gems of advice, I'd really love to hear them.

Hi Den Hoed - AtAppStore and Babayan, I'm pretty sure I'm already doing what you're both saying. These queries are run sequentially, then the results are appended. Perhaps the issue is that the second query is coming in a little too quickly after the previous one. I will try adding a delay per Vazgen's suggestion. Do you know if there is any other way I can force it not to run on a parallel thread?

Some more background if it helps: we have been using API-unsupported so that we can get access to the resource contour data. Yes, I know the whole point of it is that it is not supported and we shouldn't be relying on it, but this is the only way we can get this data and it's worked OK until now.

Late yesterday when I was troubleshooting, I set up queries to the V9 Production API for comparison. I saw significantly fewer duplicates, but there were definitely still some of them. Then the API-unsupported queries ran with very few duplicates. It did this a few times, so I thought perhaps there had been some issue with the infrastructure which was getting better. This morning, the V9 API has given me no duplicates at all, whilst API-unsupported is giving lots of duplicates again.

Troubleshooting API-unsupported, I have tried bypassing the Akamai cache, with no improvement. I also tried removing the resourceContours from the data being returned in case this was causing the issue, but it hasn't helped. So, at the moment, as far as I can see, the duplicates are consistently occurring on API-unsupported at various levels. On the V9 API the duplicates occur rarely, but they do sometimes occur.

Vazgen - I have a support ticket 1161614 open currently. I know my main issue seems to be with API-unsupported, but it does happen with V9 sometimes (as per the other customer who started this thread).
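For anyone wanting to try the "run it a few times, append, remove duplicates" workaround discussed in this thread, here is a minimal sketch in Python. It is not Workfront client code: `fetch_page` is a hypothetical stand-in for whatever performs one paged request, `delay_s` is an assumed knob for the pause between sequential requests, and `make_flaky_fetcher` only simulates the symptom (a duplicate pushing a record out of the last page) so the merge logic can be exercised offline. It also assumes the expected record count is known up front.

```python
import time
from typing import Callable, Dict, List, Tuple

Record = Dict[str, object]
# Hypothetical page fetcher: (first, limit) -> records, each with an "ID" key.
FetchPage = Callable[[int, int], List[Record]]


def fetch_all(fetch_page: FetchPage, total: int, page_size: int = 2000,
              delay_s: float = 0.0) -> List[Record]:
    """Fetch pages one after another and append them, duplicates included."""
    rows: List[Record] = []
    for first in range(0, total, page_size):
        rows.extend(fetch_page(first, page_size))
        time.sleep(delay_s)  # optional pause before the next sequential request
    return rows


def merge_runs(fetch_page: FetchPage, total: int, page_size: int = 2000,
               max_runs: int = 3) -> Tuple[Dict[str, Record], int]:
    """Repeat the full paged lookup, keeping one record per ID.

    Stops early once `total` distinct IDs have been seen. Returns the merged
    records keyed by ID and the number of full runs that were needed.
    """
    merged: Dict[str, Record] = {}
    runs = 0
    while runs < max_runs and len(merged) < total:
        runs += 1
        for row in fetch_all(fetch_page, total, page_size):
            merged.setdefault(str(row["ID"]), row)  # first copy of each ID wins
    return merged, runs


def make_flaky_fetcher(ids: List[str]) -> FetchPage:
    """Simulated backend (illustration only): during the first full run, the
    last page repeats the very first record and so loses its real last record."""
    state = {"run": 0}

    def fetch_page(first: int, limit: int) -> List[Record]:
        if first == 0:
            state["run"] += 1  # a request for offset 0 starts a new full run
        page: List[Record] = [{"ID": i} for i in ids[first:first + limit]]
        is_last_page = first + limit >= len(ids)
        if state["run"] == 1 and is_last_page:
            page[-1] = {"ID": ids[0]}
        return page

    return fetch_page
```

If fewer than `total` distinct IDs are present after `max_runs`, the merged set is still incomplete, so it is worth comparing `len(merged)` with the expected count before relying on the result. Merging runs only helps if different records go missing on each run; a record that is skipped every time will not be recovered this way.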
